Prismizer

Coding a prismizer audio plugin

If you enjoy electronic music, you might have encountered the audio effect known as the Prismizer or the Messina effect. It's essentially a harmonizer that can be controlled from a keyboard in real time. Notable users of the effect include Bon Iver and Jacob Collier.

My last year at uni was also when I discovered Bon Iver's song Creeks, which uses the Prismizer effect throughout. During my courses on Digital Signal Processing (DSP) or Analog Electronics, there would almost always be a point where I would just zone out and start thinking about how you would implement that effect technically. For my Bachelor's final project we happened to be asked to build an audio plugin, and I took this as an excuse to go ahead and give it a try myself. And well, in the end, I did have a working prototype that was good enough to get a pretty good score!

The code is not perfect by any means, and there is definitely room for improvement. Here, I want to give a general overview of how it works and hopefully provide some guidance to other people who want to implement their own version. For people who want to see the code itself and the details of the algorithms used, here are a few links that can be useful:

Note: the current version of the code on GitHub does not exactly match the version described in the paper. The biggest difference is that for the paper I implemented the pitch detection algorithm myself, whereas the current version uses a library that already does it for us. You can also see the difference between these versions in demo video 1 and demo video 2.

How to start building an audio plugin?

If you are familiar with C++, you can use JUCE, a free C++ framework that gives you a lot of useful tools for writing audio apps and plugins. It also lets you build the same code as a standalone app and in different plugin formats. Depending on your learning style, you can either go to their documentation and follow their guides, or you can follow tutorials from people on YouTube. Personally, I kept finding myself doing both. The YouTube channel Audio Programmer in particular helped me quite a lot, and I would definitely recommend it. Once you are relatively comfortable with the workings of JUCE and understand the general principles and the main methods you will use, we can get to actually coding our plugin.

High level design

Essentially, a prismizer plugin consists of three parts:

- Pitch detection: detecting the pitch/frequency of the raw vocal input

- MIDI processing: detecting the MIDI input

- Pitch shifting: shifting the pitch of the vocal input to the one indicated by the MIDI input, and outputting it

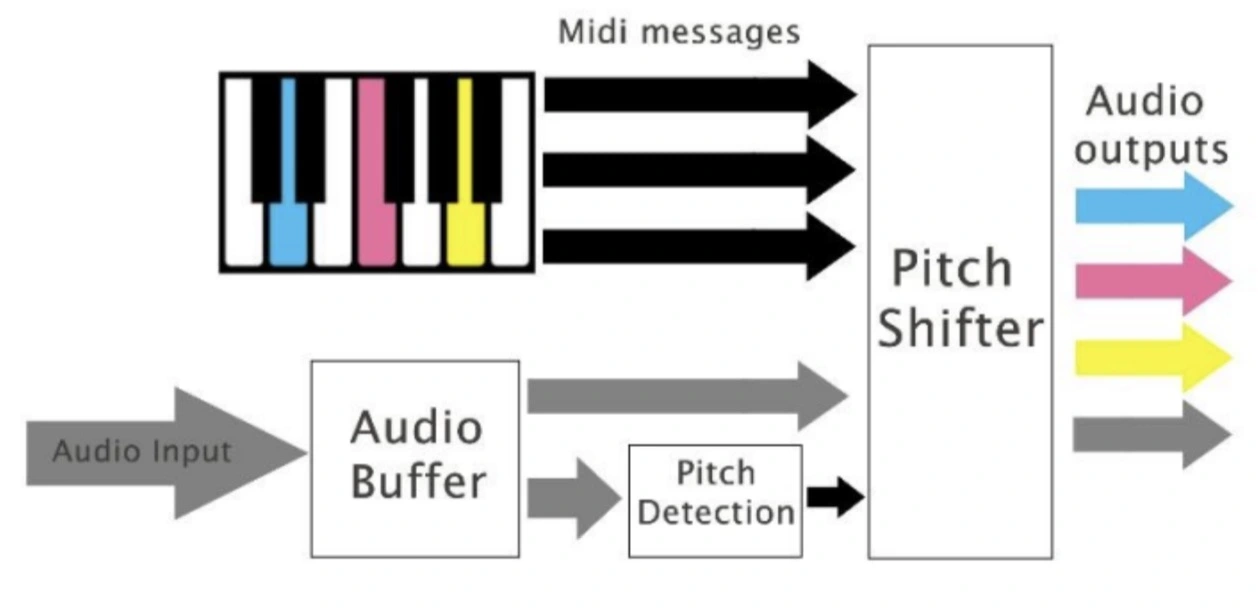

Figure 1 takes us through the high level design. The gray arrow indicates the raw vocal input (the audio signal) that comes in from the microphone and is sent to our audio buffer. In parallel, the performer may be playing notes on their MIDI keyboard, which we also want to capture. The MIDI messages are indicated by the black arrows at the top.

We can get the target frequencies directly from the MIDI messages. However, we also need to know the frequency of the vocal input so that we can calculate how much it needs to be shifted in order to hit the target notes. We will want a fast and accurate frequency detection algorithm for this. The last step is shifting the input vocal by the necessary amounts to reach those target pitches. And, again, for this we want a fast and accurate pitch shifting algorithm. By the end of this procedure, we can finally hear the raw vocal input plus harmonies of that input at the notes played on the MIDI keyboard.

Keep in mind, this is the cycle that happens each time the audio buffer is filled with enough samples. This means that if we are using a buffer size of 2048 samples and a sample rate of 44.1 kHz, the buffer will be filled about every 46 ms, and we will then process the audio samples for those 46 ms.

Pitch detection

At first, I implemented my own pitch detection algorithm using the famous "Fast Fourier Transform" (FFT) algorithm. For those who don't know, the Fourier Transform takes in an audio signal and outputs the frequency spectrum of that signal, and the FFT is a very well studied and optimized way of computing it. In other words, it shows us how much of each frequency the audio signal contains. What is important to know is that in its "discrete form" (that is, the version where the input signal is not continuous but discrete, which is always the case for digital systems), the number of output samples is the same as the number of input samples. This means that the more samples we give it, the finer the frequency resolution of the output spectrum will be. Since we want the pitch detection to be as close to real time as possible, this forces us to consider a few extra things in our implementation. If we want high accuracy in the pitch detection, we need to give it a lot of samples, meaning we would have to wait for all those samples to be collected, which means higher latency. Thankfully, there are methods to overcome this problem, one of which is overlapping the windows of samples we give to the algorithm.
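Here is a minimal sketch of the resolution trade-off, not my actual implementation. It uses a naive O(N^2) discrete Fourier transform instead of a real FFT so that it stays self-contained, and it simply picks the strongest bin. All the names (`binWidthHz`, `strongestFrequencyHz`, `makeSine`) are invented for illustration. Note also that for a real voice the strongest bin is not guaranteed to be the fundamental, so treat this as a toy.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Width of one DFT bin in Hz: the spectrum of N samples has N bins
// spread over the sample rate, so each bin covers sampleRate / N.
double binWidthHz(std::size_t numSamples, double sampleRateHz)
{
    return sampleRateHz / static_cast<double>(numSamples);
}

// Naive DFT, for illustration only (a plugin would use an FFT).
// Returns the centre frequency of the strongest bin below Nyquist, skipping DC.
double strongestFrequencyHz(const std::vector<float>& frame, double sampleRateHz)
{
    const std::size_t n = frame.size();
    if (n < 4)
        return 0.0;

    const double twoPi = 6.283185307179586;
    std::size_t bestBin = 1;
    double bestPower = -1.0;

    for (std::size_t k = 1; k < n / 2; ++k)
    {
        double re = 0.0, im = 0.0;
        for (std::size_t i = 0; i < n; ++i)
        {
            const double phase = twoPi * static_cast<double>(k)
                               * static_cast<double>(i) / static_cast<double>(n);
            re += frame[i] * std::cos(phase);
            im -= frame[i] * std::sin(phase);
        }
        const double power = re * re + im * im;
        if (power > bestPower)
        {
            bestPower = power;
            bestBin = k;
        }
    }
    return static_cast<double>(bestBin) * binWidthHz(n, sampleRateHz);
}

// Synthetic test tone, standing in for a frame of vocal input.
std::vector<float> makeSine(double freqHz, double sampleRateHz, std::size_t numSamples)
{
    const double twoPi = 6.283185307179586;
    std::vector<float> out(numSamples);
    for (std::size_t i = 0; i < numSamples; ++i)
        out[i] = static_cast<float>(std::sin(twoPi * freqHz * static_cast<double>(i) / sampleRateHz));
    return out;
}
```

Calling `strongestFrequencyHz(makeSine(440.0, 44100.0, 2048), 44100.0)` can only land within one bin of 440 Hz, and one bin here is about 21.5 Hz wide. Around 220 Hz that is already more than a semitone, which is why plain peak picking needs either more samples (more latency) or extra tricks such as overlapping windows.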

Anyway, I won't dive into the details of the algorithm and the implementation too much. Those of you who want to read and learn about it can find all sorts of useful articles and videos online (here is one actually). The conclusion is that after implementing the improvements and optimizations I could, I realised it would make more sense to use an existing, optimized library for this task, and I went with the open-source aubio library.

MIDI Processing

The main premise of the Prismizer effect is being able to manually control the notes of the harmonies in real time. For this, we use a MIDI input. Since we know the pitch of the raw vocal input, if we can read the MIDI input correctly, we can determine by how much we need to "pitch-shift" the original audio signal to create the harmonies. Here's a link to a JUCE guide to see how you can handle MIDI data. Once you have the MIDI note numbers, there must be a lot of ways to get the corresponding frequencies. The way I did it was literally defining an array where the value at each index is the frequency of the MIDI note with that number. Then, we simply get the pitch-shift factor by dividing the MIDI note frequency by the vocal input frequency. We can do this for each detected MIDI note for a polyphonic effect. Again, you can just check out the linked GitHub repo to see exactly how I implemented things.
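As a rough sketch of that lookup and division (again, not copied from the repo), the table can be filled from the standard equal-temperament formula, where MIDI note 69 is A4 at 440 Hz. The function names and the choice to return a ratio of 1.0 when no pitch was detected are my assumptions for this example.

```cpp
#include <array>
#include <cmath>
#include <cstddef>

// Equal-tempered frequency of a MIDI note, with A4 (note 69) tuned to 440 Hz.
double midiNoteToHz(int note)
{
    return 440.0 * std::pow(2.0, (note - 69) / 12.0);
}

// Precomputed table: index = MIDI note number, value = frequency in Hz.
std::array<double, 128> buildMidiFrequencyTable()
{
    std::array<double, 128> table{};
    for (int note = 0; note < 128; ++note)
        table[static_cast<std::size_t>(note)] = midiNoteToHz(note);
    return table;
}

// Factor by which the vocal must be shifted to reach the target note.
// Assumption: if no usable pitch was detected, leave the signal unshifted.
double pitchShiftRatio(double targetHz, double detectedHz)
{
    if (detectedHz <= 0.0)
        return 1.0;
    return targetHz / detectedHz;
}
```

For example, if the singer is on A3 (220 Hz) and the key pressed is A4, the ratio is 2, i.e. one octave up. Building the table once up front keeps `std::pow` out of the audio callback.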

Besides getting the pitch of each MIDI note, another cool thing we can do is read its velocity (i.e. how hard the key was pressed), so we can make that specific harmony louder or quieter and give the effect a more natural feel.
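One simple way to do this, sketched below, is to map the 0 to 127 MIDI velocity linearly to a gain between 0 and 1 and scale each harmony by it before adding it to the mix. The linear mapping and the helper names are assumptions for illustration; a curved mapping may well sound more natural.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Map a MIDI velocity (0..127) to a linear gain (0..1).
// Out-of-range values are clamped rather than trusted.
float velocityToGain(int velocity)
{
    const int clamped = std::max(0, std::min(velocity, 127));
    return static_cast<float>(clamped) / 127.0f;
}

// Add one pitch-shifted harmony into the output mix, scaled by its velocity.
void addHarmony(std::vector<float>& mix, const std::vector<float>& harmony, int velocity)
{
    const float gain = velocityToGain(velocity);
    const std::size_t n = std::min(mix.size(), harmony.size());
    for (std::size_t i = 0; i < n; ++i)
        mix[i] += gain * harmony[i];
}
```

In a JUCE plugin the velocity would come from the incoming `MidiMessage`, which can also hand it to you directly as a float between 0 and 1 via `getFloatVelocity()`.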

Pitch Shifting

Now that we know the original vocal's pitch, and we know by how much we need to shift it, all we have to do is... well, actually shift it and create the harmonies. Again, there are multiple methods for pitch shifting an audio signal, and each has its own advantages and disadvantages. The main decision is whether to do it in the time domain or the frequency domain. There is a more general discussion of this in the linked paper, but here too, after struggling for days to implement a pitch-shift algorithm based on the Inverse Fourier Transform in MATLAB, I ended up using the free and open-source SoundTouch library. Once you set it up, it's actually quite simple to use. In my implementation, I create 6 instances of the SoundTouch object so that I can play up to 6 notes on my MIDI keyboard and add all those harmonies together polyphonically.
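The bookkeeping for those 6 voices could look something like the sketch below. It deliberately leaves SoundTouch out so that it compiles on its own: in the real plugin each slot would own one SoundTouch instance. The `VoicePool` class, its methods, and the policy of ignoring a seventh note are all hypothetical choices for this example, not what the repo does. The `ratioToSemitones` helper is there because SoundTouch lets you set the pitch either as a ratio or in semitones.

```cpp
#include <array>
#include <cmath>
#include <cstddef>

constexpr std::size_t kMaxVoices = 6;

// One harmony voice. In the plugin, each would pair with a pitch shifter.
struct HarmonyVoice
{
    int midiNote = -1;       // -1 marks the voice as free
    double shiftRatio = 1.0; // target frequency / detected vocal frequency
};

class VoicePool
{
public:
    // Claim a free voice for a newly pressed key.
    // Returns false when all voices are busy (the extra note is ignored).
    bool noteOn(int midiNote, double shiftRatio)
    {
        for (auto& voice : voices)
        {
            if (voice.midiNote < 0)
            {
                voice.midiNote = midiNote;
                voice.shiftRatio = shiftRatio;
                return true;
            }
        }
        return false;
    }

    // Release every voice playing the given key.
    void noteOff(int midiNote)
    {
        for (auto& voice : voices)
            if (voice.midiNote == midiNote)
                voice = HarmonyVoice{};
    }

    std::size_t activeCount() const
    {
        std::size_t count = 0;
        for (const auto& voice : voices)
            if (voice.midiNote >= 0)
                ++count;
        return count;
    }

private:
    std::array<HarmonyVoice, kMaxVoices> voices{};
};

// Convert a frequency ratio to semitones (ratio 2.0 = +12 semitones).
double ratioToSemitones(double ratio)
{
    return 12.0 * std::log2(ratio);
}
```

Since the vocal pitch keeps moving while a key is held, the shift ratio of each active voice would need to be refreshed on every buffer, not just at note-on.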

Conclusion

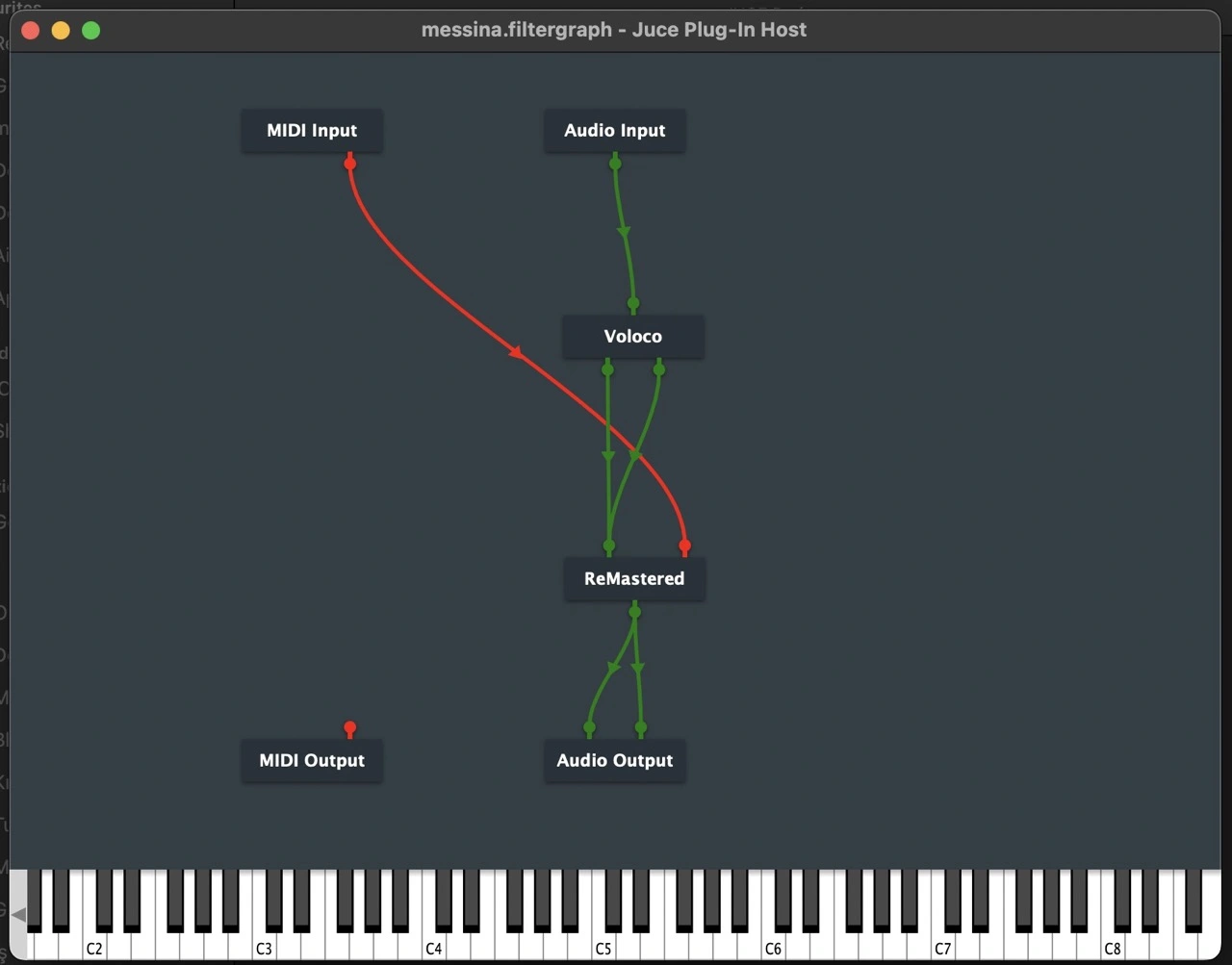

We went over the high level design of a Prismizer audio plugin. Before I end the article, a quick tip: when you are actually building the plugin (or any other plugin using JUCE), the AudioPluginHost app that comes as one of JUCE's example projects can be super helpful for testing your plugin. Again, for more detailed information on the mentioned algorithms, libraries, and frameworks, you can refer to the links I left in the article.

I hope this article was helpful for some people out there. Have a great rest of your day and good luck! :)